One of the most enjoyable days so far today, as the planned Power Query WOW webpage scraping wasn't working as expected, so another had to be found quickly, and TL;DR no-one was entirely sure exactly how to get all the pages in properly. It was a set of baseball figures, one webpage per year each coming from a URL taking the form of https://www.base.ball-reference.com/leagues/majors/YYYY-standings.shtml, with YYYY needing to be replaced by each year from 2000 to 2020.

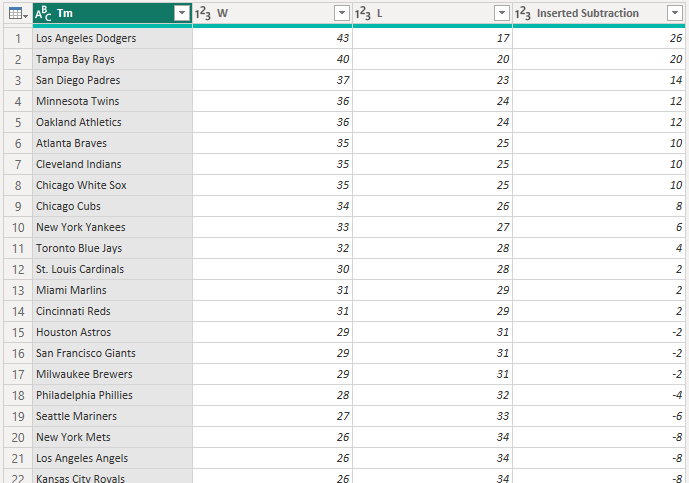

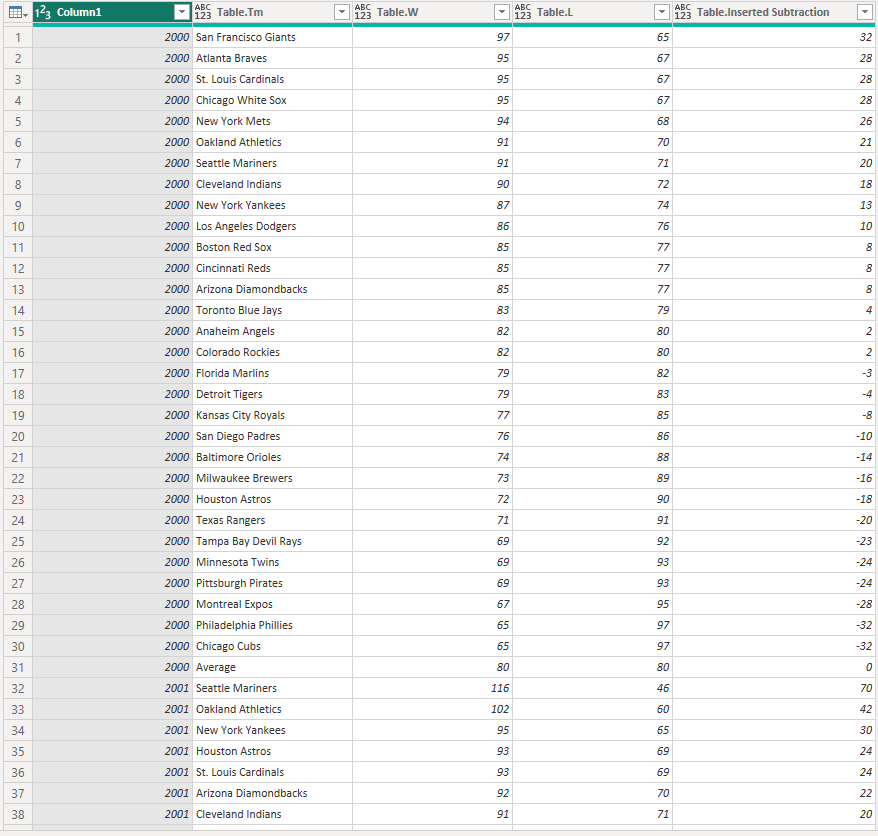

Getting the data from one webpage was easy - 2000-standings.shtml loaded into Power BI with no issues, and gave us exactly the table we needed, with one row for each of 30 teams. Cleaning the data was also easy, just needing to delete most of the columns and add a quick calculation.

But how, then, to get the other twenty years in? And although, as a relative newcomer to Power Query and its related functions and code, it seemed like the easiest option might be just to load them in manually and append the tables together - there were only twenty, wouldn't take too long - it also seemed to me like there really ought to be a better way, and as it turned out, there is!

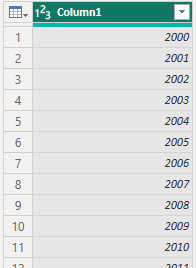

Step one was to create a list of years. This was fairly easy: create a new query with the code "= {2000..2020}", and convert that to a table:

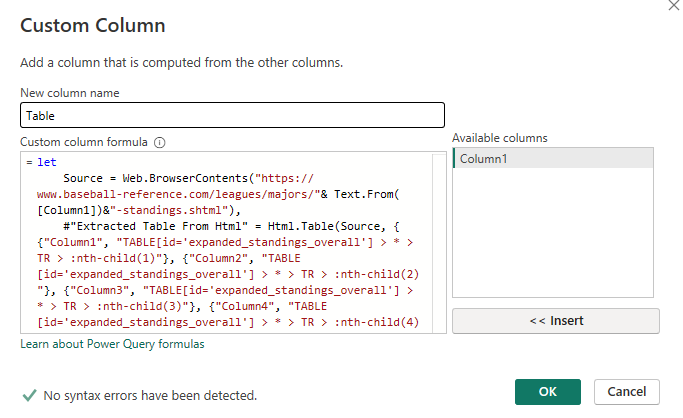

The next step was to load in, essentially, a copy of the first table in each of those rows, but with the URL changed each time, from "2000" to whatever the corresponding value in Column1 was. And how to do that? Lots of code! Use the Advanced Editor feature to obtain all the Power Query code required to generate the table above, and plug it into the Custom Column function:

The one change required was to remove the "2020" from the URL and replace it with a reference to Column 1, by splitting the URL string and plugging that in the middle. I also used the Text.From function to prevent the fact that it was a numeric field being a problem.

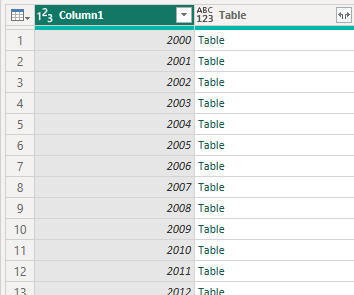

This gave me a column full of tables as required, as seen previously after many a Merge function, and expanding those gave exactly what I wanted: one row per team per year, with all the previous cleaning carried through:

There was still a bit more to go - some teams had changed names over the years, some had stopped existing altogether - but overall, it was a fairly simple, and definitely very logical, process, and hopefully the logic I followed going through it (and then guiding others in my cohort through it) will give me a bit of instinct with other similar situations in future!