If you're looking to scrape several web pages whose URLs follow an almost identical pattern, you can do so very easily using the following steps in Alteryx. In this example, we'll be using a standard URL that differs only by an ascending number each time. However, the same approach works with any list of values that you need and can realistically generate within the software.

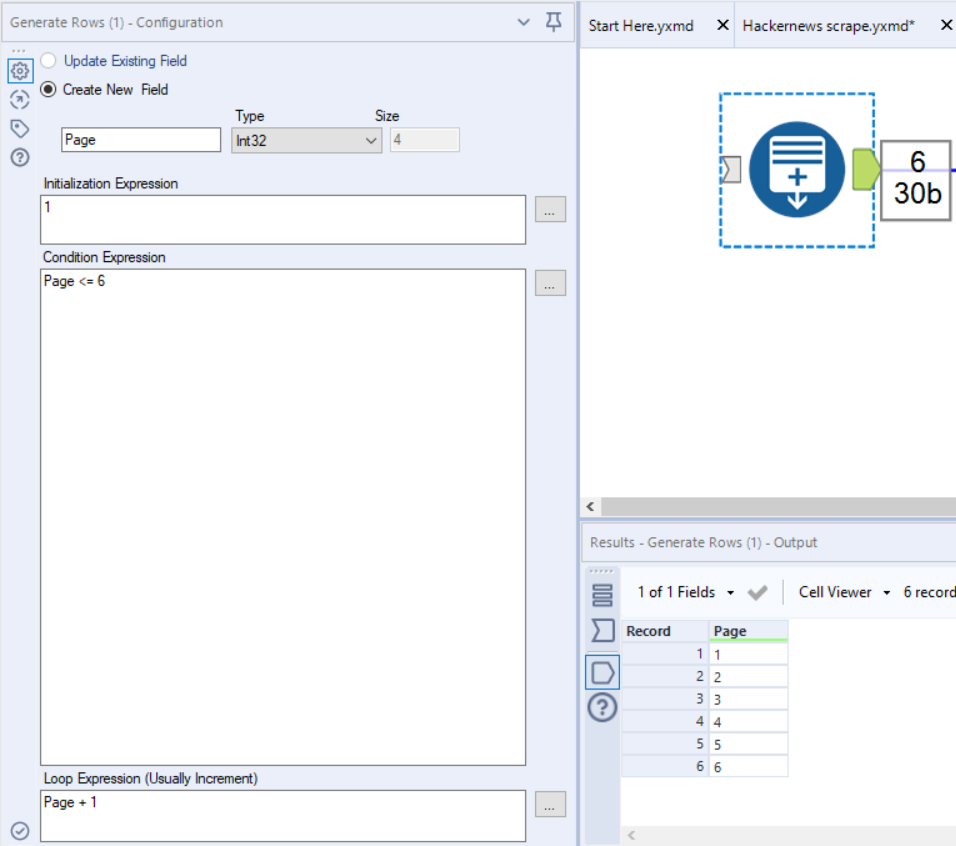

First of all, we'll generate a number of rows to produce the list - the contents of these will eventually become part of the URL. In this instance, we're generating 6 rows.

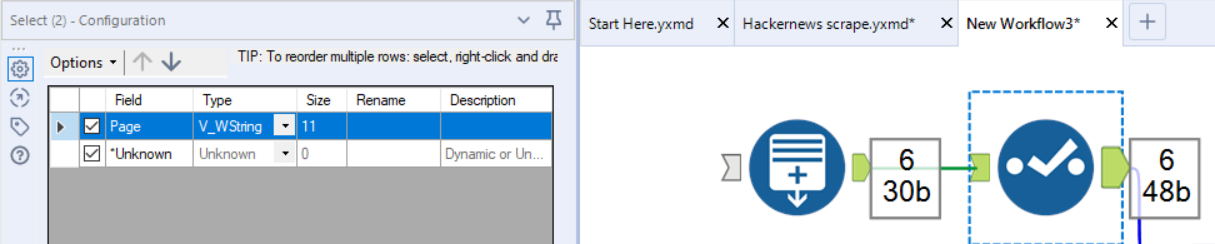

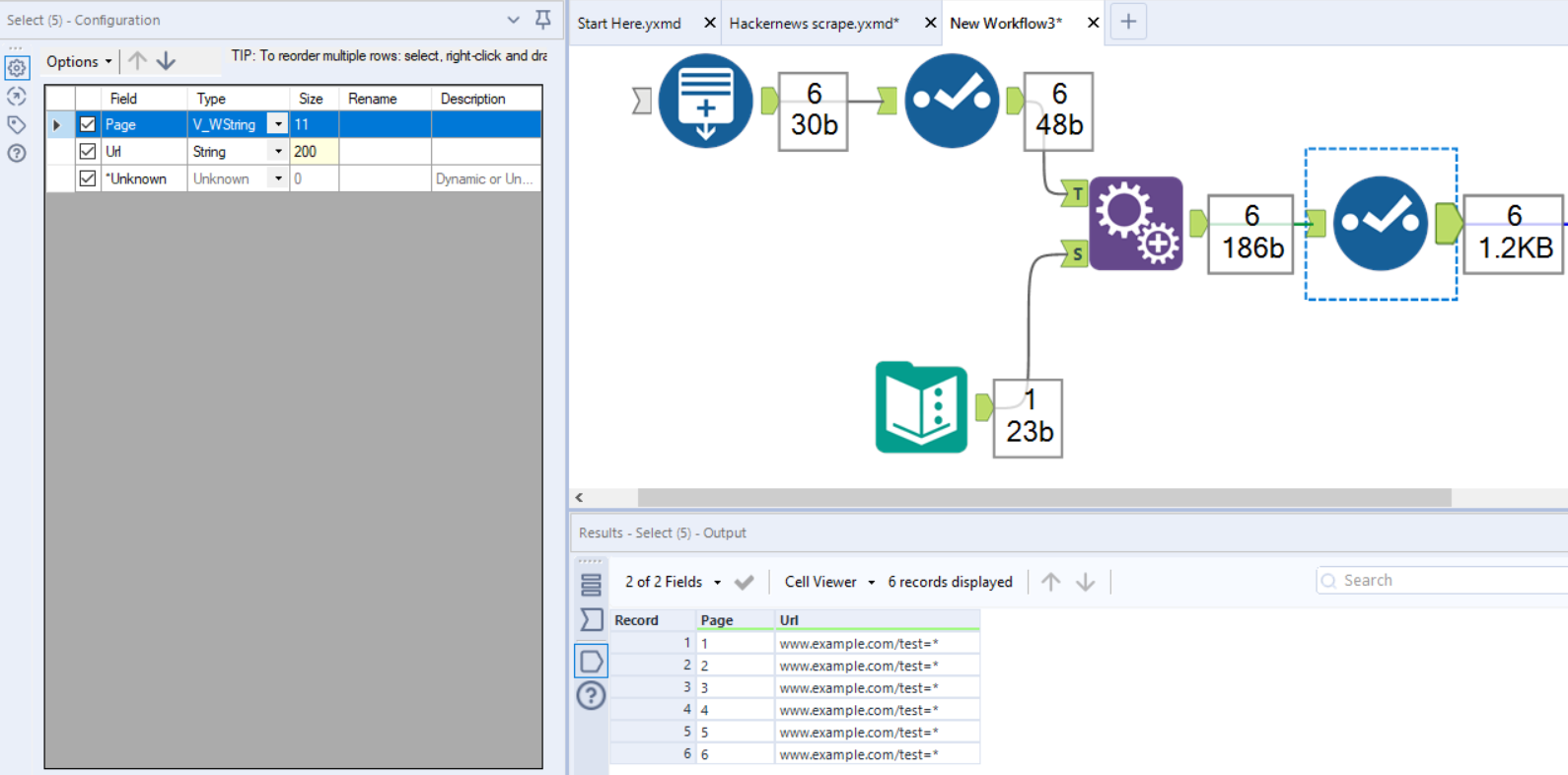

Next, we'll convert these generated values to a string so that they can be used in our formula later. This can be done at a later stage, but I've just placed my Select tool second.

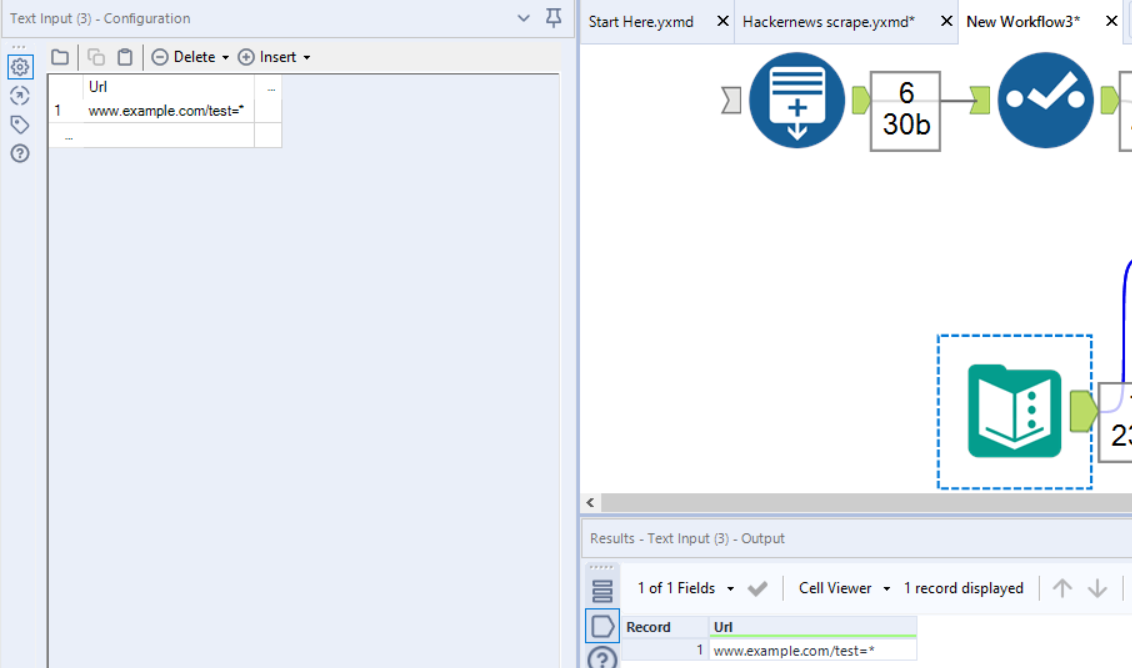

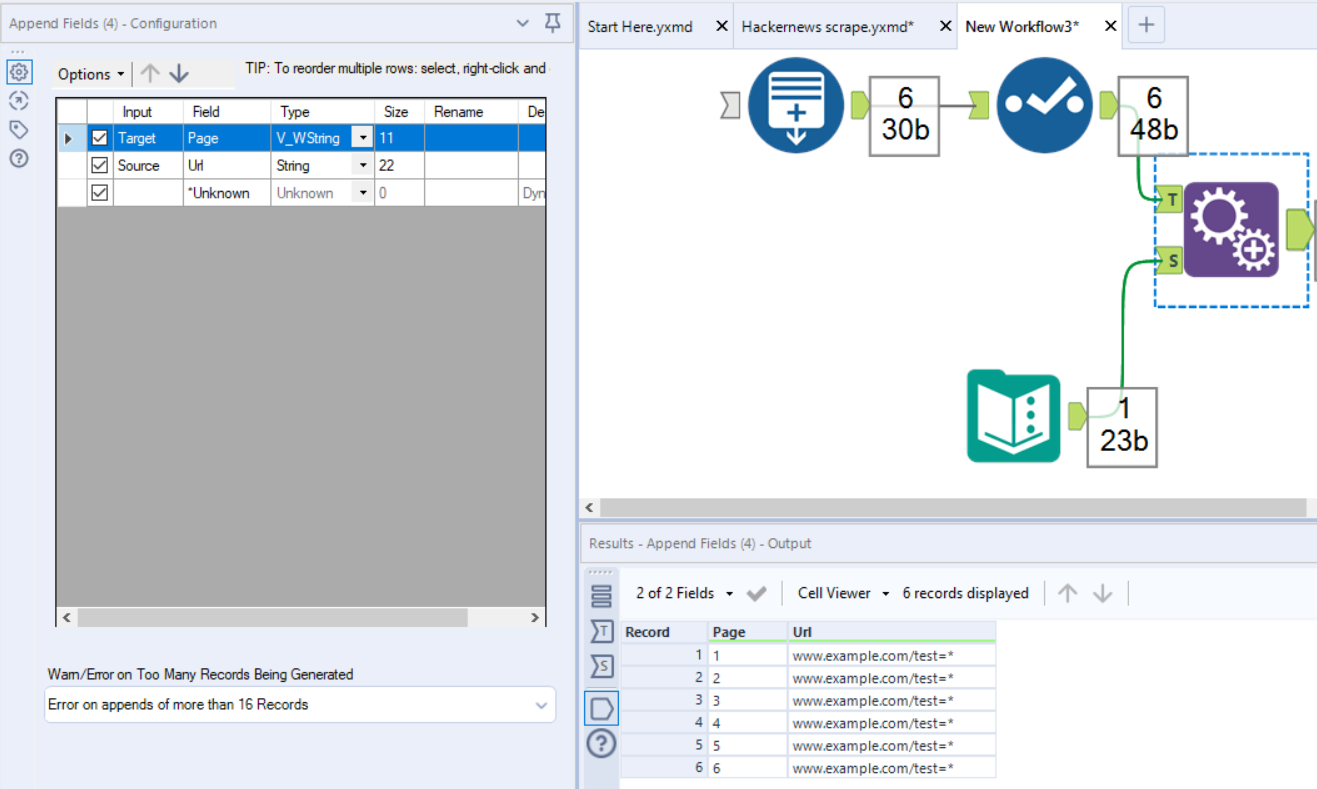
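If it helps to see the first two steps outside of Alteryx, here is a rough Python sketch of the same idea: generate six rows, then cast each value to a string. The starting value of 1 and the name `page_ids` are my own assumptions for illustration, not something the workflow dictates.

```python
# Assumption: the generated rows start at 1 and go up in steps of 1.
row_count = 6

# Step 1: generate the rows (1 to 6).
generated_rows = list(range(1, row_count + 1))

# Step 2: convert each value to a string so it can be spliced into a URL later.
page_ids = [str(value) for value in generated_rows]

print(page_ids)
```

The string conversion matters for the same reason it does in the workflow: you can't drop a number straight into a text replacement.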

Third up, we want to append our core URL to each of these generated rows so we can combine them in our formula. I've just plugged a dummy address into the Text Input tool for the sake of this demonstration, but this is where you'd insert your website of interest.

Now, in this scenario, the URL field in the output of our Append Fields tool has a field size of 22. This means that if our final target URL is longer than 22 characters, it will be cut off at that limit. Therefore, we need to extend this. To be safe, I've increased the limit to an arbitrary 200 characters.
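To see why the field size matters, here is a small Python sketch that imitates a fixed-size string field. The template address and the `fit_to_field` helper are hypothetical, made up purely for this illustration; the point is only that a URL that outgrows its field silently loses its last characters.

```python
def fit_to_field(value: str, field_size: int) -> str:
    """Imitate a fixed-size string field by cutting off anything past the limit."""
    return value[:field_size]

# Hypothetical template address; '*' marks where the page number goes.
template = "https://example.com/page/*"
original_size = len(template)  # the field is only as wide as the template

full_url = template.replace("*", "10")  # one character longer than the template

truncated = fit_to_field(full_url, original_size)
safe = fit_to_field(full_url, 200)

print(truncated)  # https://example.com/page/1
print(safe)       # https://example.com/page/10
```

Notice that the truncated version is still a valid-looking URL, just for the wrong page, which is exactly the kind of bug that is easy to miss.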

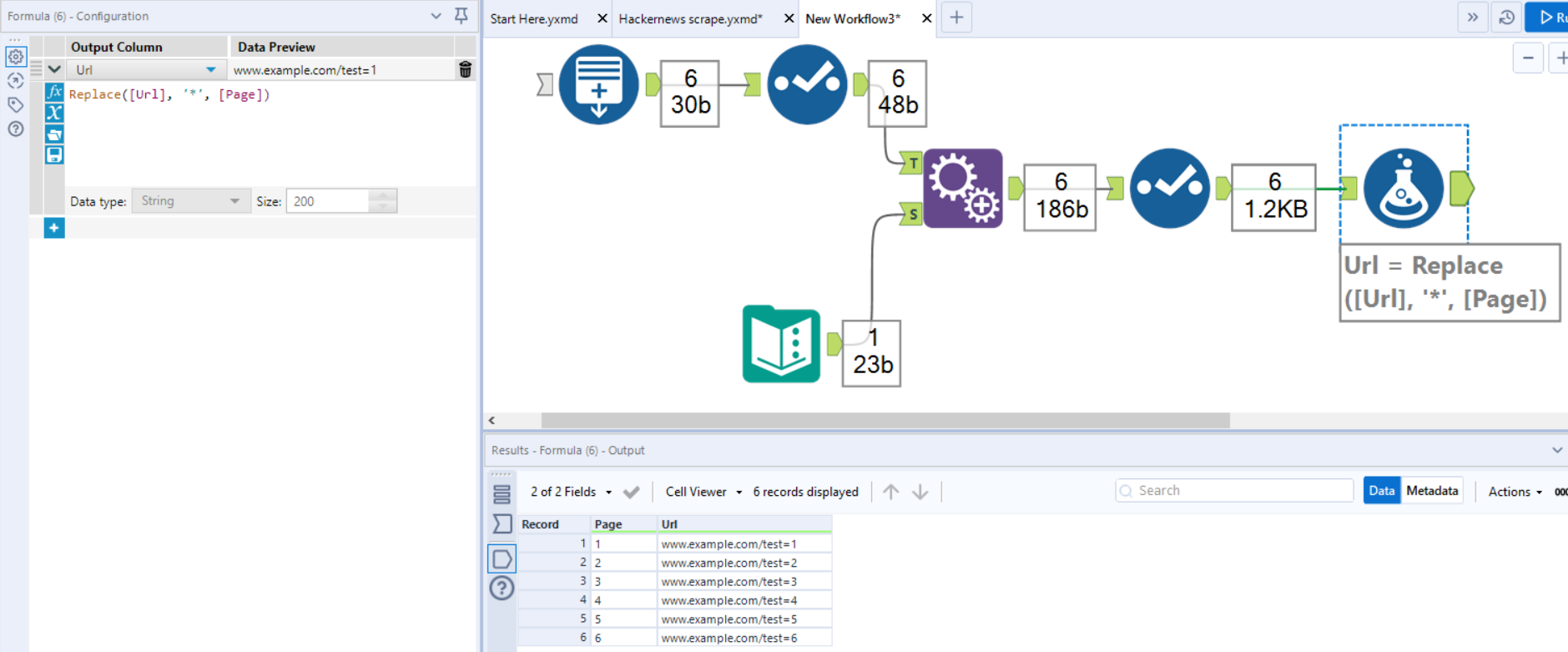

For the penultimate step, we need to chuck our generated page numbers into our URL as discussed earlier. To do that, we'll just use a simple Formula tool. As I used '*' as a placeholder in my dummy address, this is the target for replacement, and we put the page IDs that we generated at the beginning in its place.
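The replacement itself is just a find-and-replace on the placeholder. As a minimal sketch in Python, assuming a made-up template address and page IDs 1 to 6 (the `build_urls` function is my own name, not part of any tool):

```python
def build_urls(template: str, page_ids: list[str], placeholder: str = "*") -> list[str]:
    """Swap the placeholder in the template for each page ID in turn."""
    return [template.replace(placeholder, page_id) for page_id in page_ids]

# Hypothetical inputs for illustration.
template = "https://example.com/page/*"
page_ids = [str(n) for n in range(1, 7)]

urls = build_urls(template, page_ids)

for url in urls:
    print(url)
```

One thing to watch: pick a placeholder that can't appear anywhere else in the address, otherwise every occurrence gets replaced.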

Now you have a bunch of URLs ready to go. As the title of this blog suggests, a sensible use case for this is if you have a bunch of web pages that you want to pull data from, so you'd likely want to connect a Download tool next and point it at your generated URL field. If you want to take things further, you can even build in some extra features and turn this into an app, where users can select what kind of values and increments they want in their generated fields!
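For anyone curious what the download step looks like in code, here is a loose Python equivalent using only the standard library. It is a sketch rather than a replica of the Download tool: `fetch_page` and `download_all` are names I've invented, and failed requests are simply recorded as `None`.

```python
from urllib.request import urlopen

def fetch_page(url: str, timeout: float = 10.0) -> bytes:
    """Download the raw body of a single page."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()

def download_all(urls, fetch=fetch_page):
    """Fetch every URL in turn, mapping each one to its body (or None on failure)."""
    results = {}
    for url in urls:
        try:
            results[url] = fetch(url)
        except OSError:
            # urllib's URLError and HTTPError are subclasses of OSError.
            results[url] = None
    return results
```

You would call `download_all(urls)` with your generated list. The `fetch` argument is there so you can swap in a different downloader, or a fake one for testing, without touching the loop.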