The first week at the data school saw DS40 wrap their heads around Tableau Prep.

When we're released into the real world in 4 months time - and work with real clients 🤯 - we will very rarely be given a data set which allows us to immediately build a visualization. There are several bits of software we can use to help manipulate data into a helpful format, and this week we learnt the basics of Tableau Prep.

Headlines

Cleaning Data

To me, this is the easiest part of prepping data. Cleaning data includes splitting fields, removing rows and / or columns, correcting data types and grouping rows - all tasks which Tableau Prep make easy. This stage can also include manipulating strings to make them easier to work with by removing numbers or punctuation, making the case consistent and trimming white space.

Joins and Unions

Often, data will be spread across more than one table. Joins and unions allow us to bring these tables together into one useful dataset. The way I understand these two concepts is:

- Different columns -> JOIN brings the columns together into one table and the type of join controls how many rows we have

- Same / similar columns -> UNION stacks the rows on top of each other

Pivots

Computers think differently to people. This means the way we structure a table of data might not work for a computer. Pivoting is all about swapping columns and rows to create a data set a computer can work with. Columns to rows is the more common (and easier!) pivot, but pivoting rows to columns might also be required.

Aggregation

Aggregating functions can do lots of different things. They can change the granularity of the rows by grouping them together and aggregating the values along the rows - this might be a sum, average or another similar function - or they can be used to remove duplicate rows. Shout out to Ed from DS38 for showing me this method!

Preppin' Data

Jenny and Carl set us some Preppin' Data challenges so we could practice applying the theory we'd learnt in Tableau Prep. The challenges started off easy but quickly ramped up in difficulty. I really enjoyed the problem solving aspect of these tasks, even if some of my solutions were not very performant. There are tons of challenges dating back to 2019 along with a participation tracker so this will be a really useful resource to get some practice in.

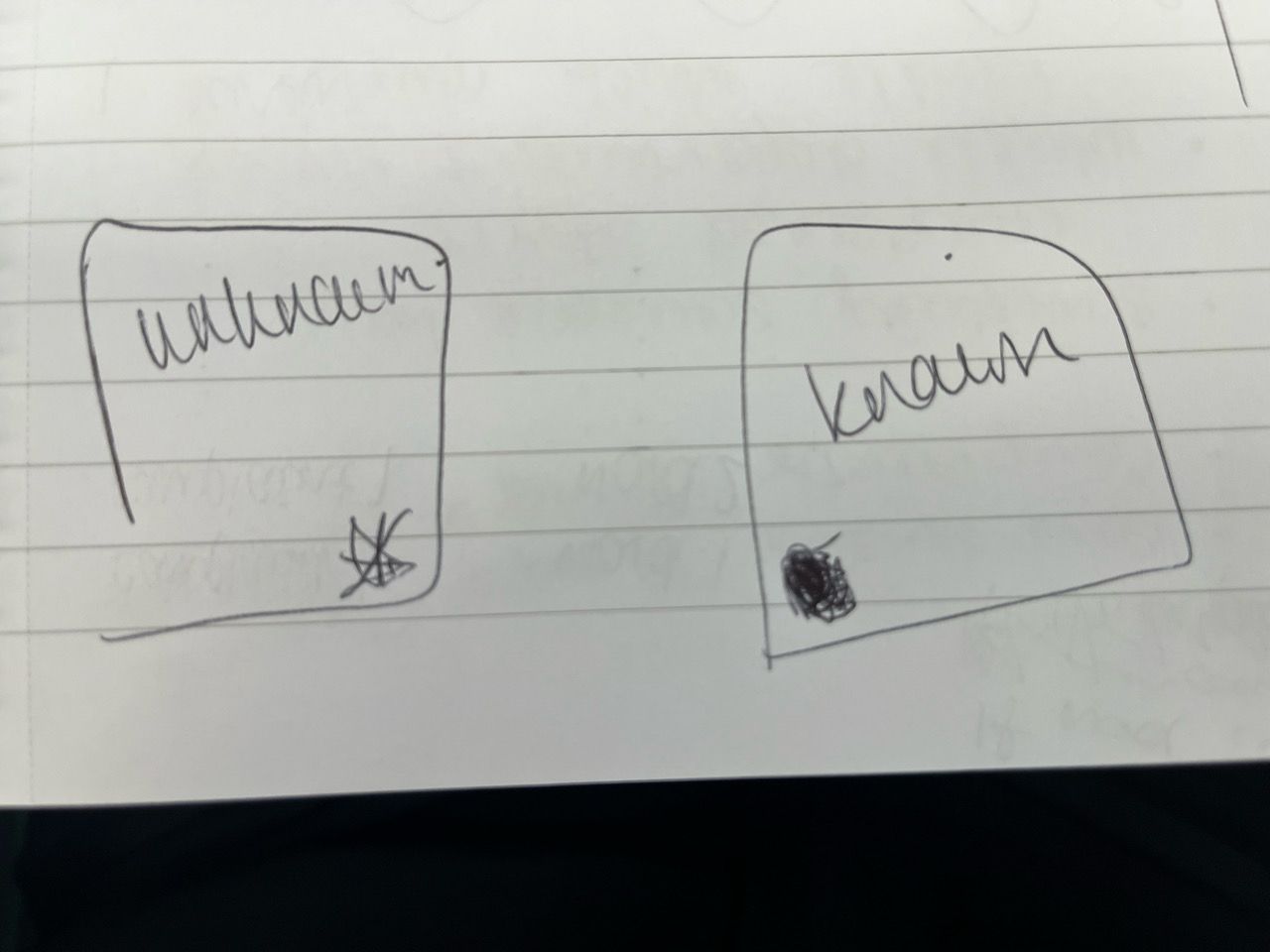

I think the most important thing I learnt from working on the challenges is to always make a plan of how I'm going to tackle the problem. The first step is to look at and understand the data you've been given. I then would think about what I wanted my end result to be and sketched out how I wanted my tables to look at each step. Making a sketch of how I wanted my joins to work was also really useful. I guess this planning process will be different for everyone - I'm pretty sure nobody will know what I meant by the below, but it really helped me!

Project

This is DS40s first time working on a project, and our first presentations are this afternoon. Wish us luck!

I was paired with Ollie this week to present on joins and unions. We've worked well together so far and have listened to each others feedback on how to improve our sections of the talk. This experience has been really useful for my understanding on the topic, since Ollie thinks about joins and unions in a spatial way, whereas to me its all about the columns.

Joins and unions tips and tricks

- You can join 2 tables at one time

- You can union up to 10 tables at one time

- To join on columns based on whether a substring is contained in another string, you'll need to create a dummy column of 1s in each table and join the two tables together. This will create loads of rows but don't worry! After the join, you can then create a calculated field which determines if the substring is contained in the string. Then all you need to do is filter out the rows where the strings don't match up et voila!

I'm super excited to see what everyone else in DS40 brings, and also what DS39 have been working on.