In this blog I am going to run through how I used webscraping to find Manchester United's all time top 25 goal scorers. I am not going to lie, this was tough and took a long time to get to the finished product. Webscraping is essentially the task of extracting specific data from websites that you want to work with. It seems daunting and tedious, but if you follow some simple steps it works effortlessly... hopefully!

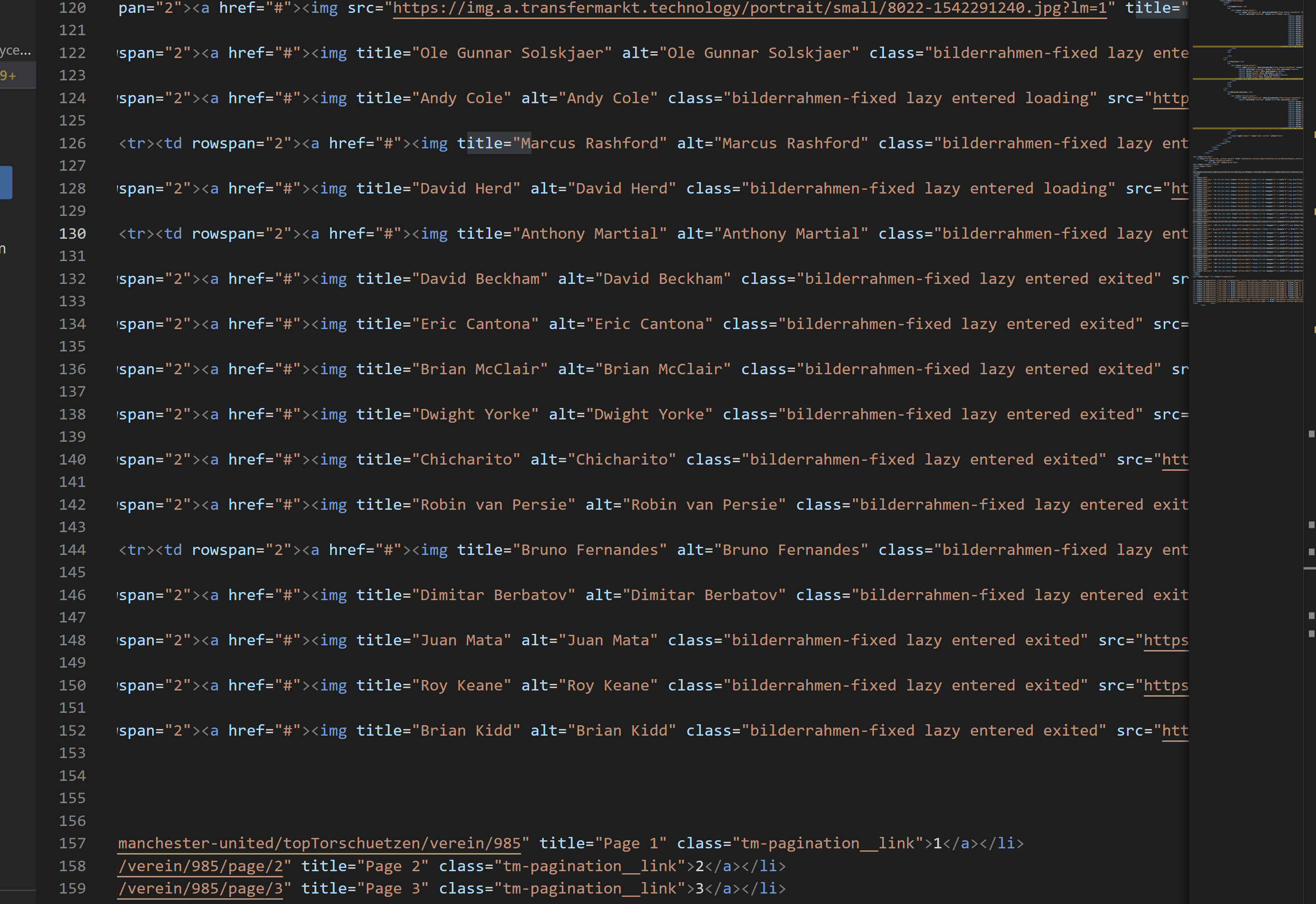

To start I found a suitable website to webscrape from. A simple Google search led me to one that gave me all the information I needed. From here I needed to inspect the website to see what specific information I needed to pull. To do this I used 'Ctrl, Shift, I' to inspect the website. Unfortunately the site I chose was translated from German so some of the fields were hard to pick out. Once I found the part of the site I wanted to pull the code from, I copied this over to Visual Studio Code to have a further in-dept look.

This was a difficult set of code to work with and it involved a LOT of Regex and cleaning in order to get it into a clean table. There are many different ways to do this but I found this way suited me the most.

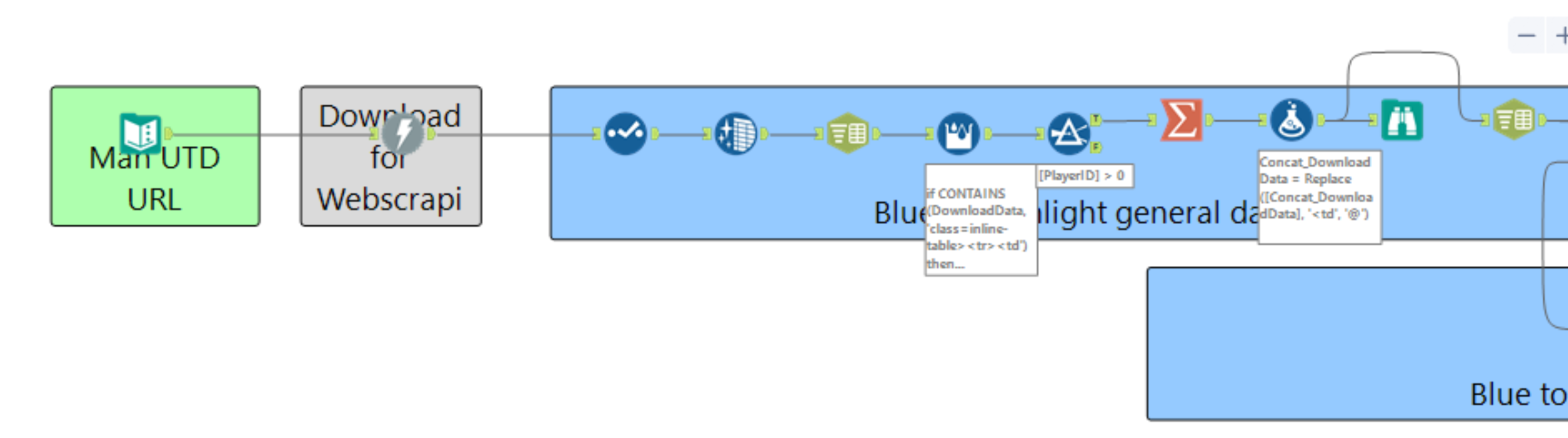

I built a workflow which inputted the website URL and put a download tool behind it. I also needed to add in a 'User-Agent' into the Headers of my download tool as the website I was scraping required a chrome connection in order to work. From here I played around with the data, cleansing out all of the whitespace and line breaks so that I could break it up into different rows.

Once my data was in different rows I had to figure out what common section delimiter was within the data. I needed these so that I could assign each player a Player ID later on. After I found it I used a multi-row formula to assign my player ID's :

if CONTAINS(DownloadData, 'class=inline-table><td') then [Row-1:PlayerID]+1 elseif CONTAINS(DownloadData, '</div') then 0

else [Row-1:PlayerID] endif

I then put in a filter to remove all rows with a Null ID. From here, it got tricky. I wanted each Player to have their own row so I grouped by the Player ID and Concatenated the Download Data. I noticed that I could not simply split the player info by a generic delimiter due to the code used. I needed to replace '<td' with something that was not in the code - I used @. Now I could start to split the player info into different columns using @ as my delimiter.

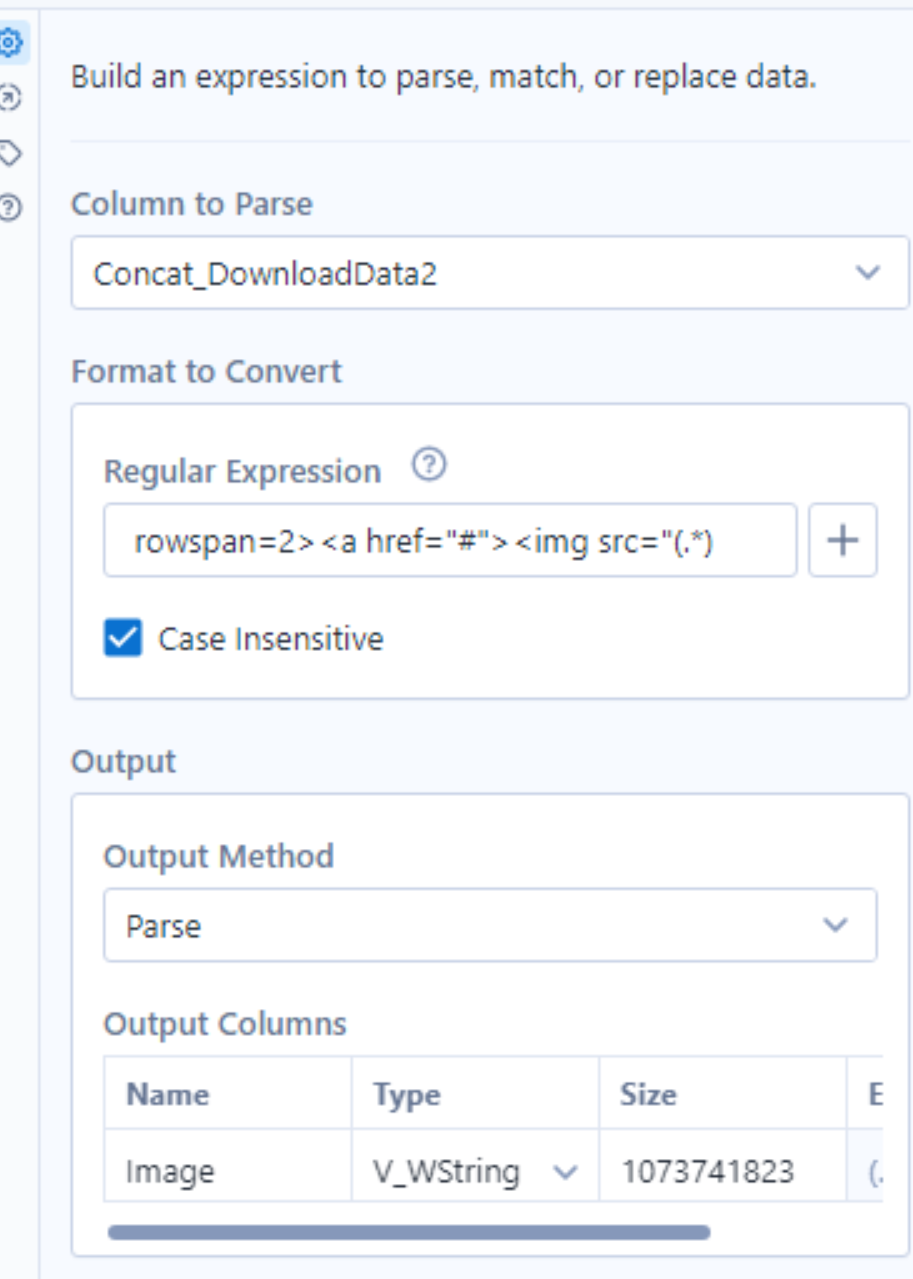

All that was left to do was to use Regex to Parse out the Player Stats I needed and rename the Headers Accordingly.

Some of the Regex used included:

I was left with a nice and clean table that I can now bring into Tableau and make a viz with!