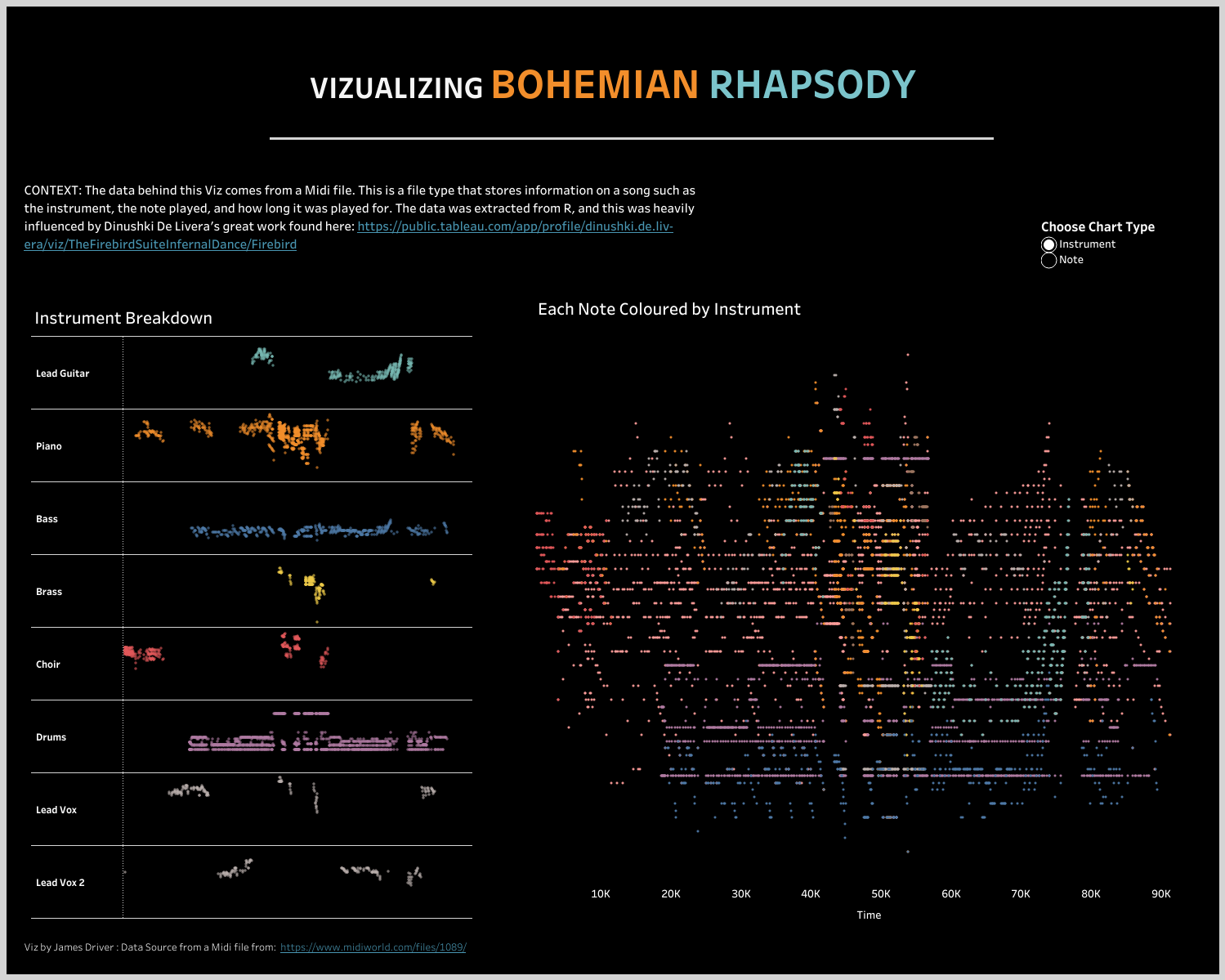

What is MIDI data? A MIDI file contains all of the data behind a piece of music, such as the notes played, when they were played, and which instruments were used. A lot of this project was influenced by Dinushki De Livera's work found here: https://www.herdata.net/post/translating-music-into-data, but I will add some more detail around a couple of problems I came across when working with this data type. If you want to know what it looks like, here is a viz I created using a MIDI file:

It looks like a lot of information, but it breaks down every note played in the song Bohemian Rhapsody and the order in which it was played. We can also see when each instrument comes in, and the most frequently played keys.

Getting the Data: MIDI data is available from lots of different places; I found my files here: https://www.midiworld.com/files/. The files are free to download (watch for copyright issues when playing them in a public forum). Once you have downloaded your MIDI file, simply save it into a folder. The next step is the most complicated one, as it involves downloading RStudio, which can be a bit tricky to work with. RStudio is, however, easier to work with than the R terminal (as I found out the hard way), and it is free to download.

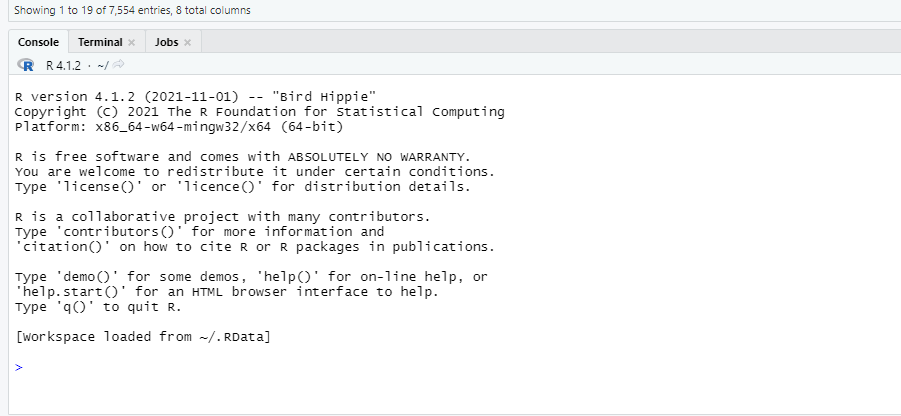

This is the console space we will be working in, and the next part will involve a bit of copy and paste. Paste these lines below into your console (paste one line at a time and hit enter on each line):

install.packages("tuneR",repos="http://cran.r-project.org")

library(tuneR)

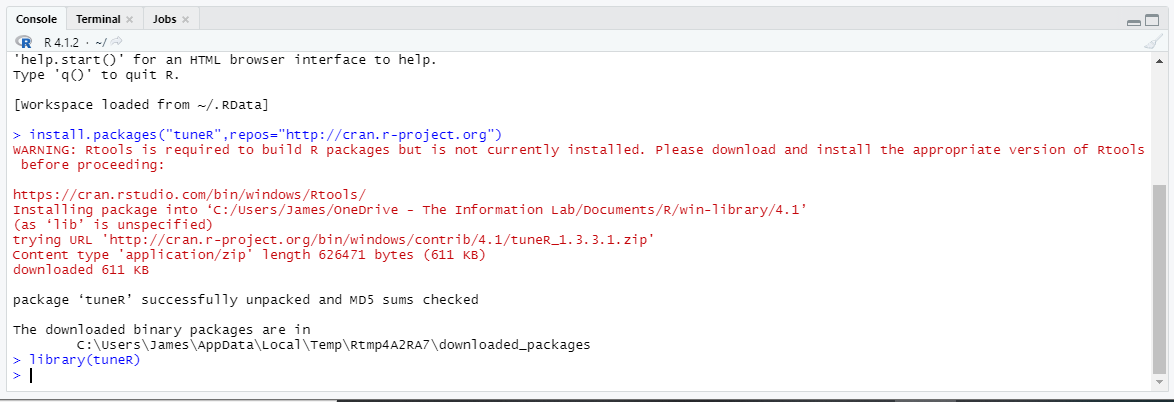

You should now see this appear on your console:

Don't worry about the red messaging: we have now downloaded and installed the package that will extract the MIDI data for us. The next steps will bring the data into R and then write it out in a text format for us to read.

snap<-readMidi("Location of your file.mid")

snap2<-getMidiNotes(snap)

For the first line, remember to wrap the file location in double quotes and keep the .mid at the end. The easiest way of avoiding problems when pointing to the file location is to copy the file address in File Explorer and paste it into RStudio (make sure you are using / to separate the folders rather than the Windows default \, which R treats as an escape character).
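If retyping the slashes gets tedious, base R can do the swap for you. The sketch below uses a made-up Windows path (`C:\Users\me\Music\song.mid` is purely illustrative) and `chartr()` to turn every backslash into a forward slash before the path is handed to `readMidi()`.

```r
# Hypothetical path copied from File Explorer; backslashes must be doubled
# inside a normal R string.
raw_path <- "C:\\Users\\me\\Music\\song.mid"

# chartr() swaps one character for another across the whole string.
clean_path <- chartr("\\", "/", raw_path)

print(clean_path)
# snap <- readMidi(clean_path)  # then carry on with the steps in this post
```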

write.table(snap,"Output location.txt",sep="\t",row.names=FALSE)

write.table(snap2,"Output location.txt",sep="\t",row.names=FALSE)

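This is where we tell R to save our data as text files. Again, remember to wrap the location in double quotes and end it with .txt to output a text file. Give each of the two outputs a different file name, otherwise the second line will overwrite the file written by the first.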
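A quick way to check that the export worked is to read a file straight back in. The sketch below uses two small made-up data frames in place of `snap` and `snap2` (the columns and values are illustrative only) and writes to R's temporary folder, so it runs without a MIDI file. Each output gets its own file name so that neither overwrites the other.

```r
# Stand-ins for snap and snap2; values are made up for illustration.
events <- data.frame(time = c(0, 0),
                     event = c("Set Tempo", "Note On"),
                     track = c(1, 2))
notes  <- data.frame(time = c(0, 480), note = c(60, 64), track = c(2, 2))

# Separate file names for the two outputs.
events_file <- file.path(tempdir(), "events.txt")
notes_file  <- file.path(tempdir(), "notes.txt")

write.table(events, events_file, sep = "\t", row.names = FALSE)
write.table(notes,  notes_file,  sep = "\t", row.names = FALSE)

# read.delim() expects tab-delimited text with a header row.
check <- read.delim(notes_file)
print(check)
```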

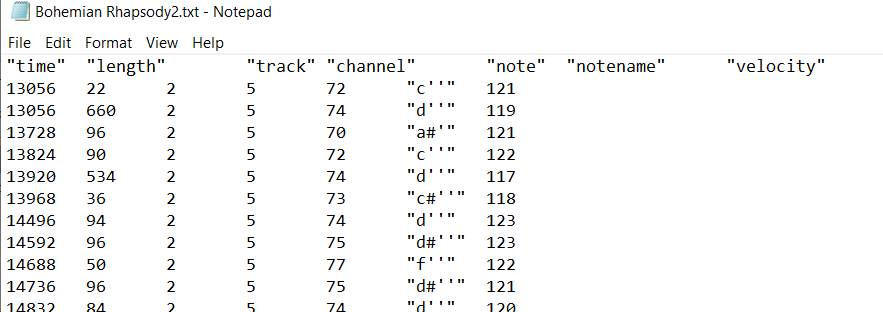

We should now have two text files, one of which will look like this. We can begin to see the data and are now able to get this ready to import into Tableau.

What does the data mean: MIDI data can throw us some challenges when working with it, as it is not designed to be read in this format; rather, it is meant to communicate with playback software. Here are a few of the important parts:

Time: This one is the hardest to understand as it is a MIDI time format, counted in ticks rather than seconds. A common reference point is 24 ticks per quarter note, which is the rate of the MIDI clock; however, this is not always the case, as each file sets its own resolution in its header and the tempo can change during a song. For the sake of this blog we can take the MIDI time as a measure of the length of the song. (If you have any insights into calculating MIDI time in seconds then drop me a message).
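One common way to approach the seconds question, assuming the song keeps a single tempo throughout: divide the ticks by the file's ticks-per-quarter-note resolution to get quarter notes, then multiply by the length of a quarter note. MIDI files store tempo as microseconds per quarter note, with 500,000 (120 beats per minute) as the default. The function name and the example resolution of 480 are my own placeholders, so substitute the values from your file. Treat this as a sketch only, because songs with tempo changes need each section converted separately.

```r
# Convert MIDI ticks to seconds for a constant-tempo song.
# ticks_per_quarter: resolution from the file header (480 is only an example).
# us_per_quarter: tempo in microseconds per quarter note (500000 = 120 bpm).
ticks_to_seconds <- function(ticks,
                             ticks_per_quarter = 480,
                             us_per_quarter = 500000) {
  ticks / ticks_per_quarter * us_per_quarter / 1e6
}

# 960 ticks at 480 ticks per quarter note is two quarter notes,
# which lasts one second at 120 bpm.
ticks_to_seconds(c(0, 480, 960))
```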

Note: Anyone familiar with music theory will recognize this one, as it represents the note played at each point in the track. The MIDI time next to the note tells us when it was played.
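If you are working from the note as a number, it can be turned into a familiar note name with a little arithmetic. MIDI numbers notes from 0 to 127 in semitone steps, and a widely used convention puts middle C at 60 and calls it C4. The helper below (`midi_note_name` is my own name, not part of tuneR) is a small sketch under that convention; some software labels middle C as C3 instead, so octave numbers may differ by one.

```r
# Map MIDI note numbers (0-127) to note names, assuming middle C (60) = C4.
midi_note_name <- function(note) {
  pitch_classes <- c("C", "C#", "D", "D#", "E", "F",
                     "F#", "G", "G#", "A", "A#", "B")
  octave <- note %/% 12 - 1            # e.g. 60 %/% 12 - 1 = 4
  paste0(pitch_classes[note %% 12 + 1], octave)
}

# Middle C, the A above it, and the lowest key on a standard piano.
midi_note_name(c(60, 69, 21))
```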

Length: This is how long each note was played for. It is a useful piece of information as it saves us having to calculate this ourselves with a join between the two data sets.

Track: This is how we can pull in our instrument data. Each track represents a specific instrument, broken down by each note that the instrument played and when it played it. We can use a lookup against our first text file, as each track will contain a label that defines the instrument. The field we want to run the lookup against is called parameterMetaSystem, from the first text output. Note that sometimes the instrument will appear as unknown in the MIDI data. The only workaround I have found so far is to listen to the song and determine the instrument based on the timing it was played at.

Here is an example of a quick join we can run in Alteryx to match up the data (this could also be done in Excel with a quick lookup).
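For anyone without Alteryx, the same lookup can be sketched in base R with `merge()`. The two small data frames below are made up to stand in for the two text outputs. In particular, the event label `"Sequence/Track Name"` and the instrument names are assumptions for illustration, so check the `event` values in your own first file before filtering on them.

```r
# Stand-in for the first text file (all events); values are illustrative.
events <- data.frame(
  track = c(1, 2, 2),
  event = c("Sequence/Track Name", "Sequence/Track Name", "Note On"),
  parameterMetaSystem = c("Piano", "Bass", NA),
  stringsAsFactors = FALSE
)

# Stand-in for the second text file (one row per note).
notes <- data.frame(track = c(1, 1, 2),
                    note  = c(60, 64, 36),
                    time  = c(0, 480, 0))

# Keep one label per track, then rename the column to something readable.
labels <- events[events$event == "Sequence/Track Name",
                 c("track", "parameterMetaSystem")]
names(labels)[2] <- "instrument"

# all.x = TRUE keeps notes whose track has no label (instrument becomes NA).
notes_labelled <- merge(notes, labels, by = "track", all.x = TRUE)
print(notes_labelled)
```

Keeping unmatched notes rather than dropping them makes it easier to spot the tracks that still need an instrument assigned by ear.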

Velocity: This is the last one that you might want to explore, as it represents how hard a note was struck. It sits on a scale of 0 to 127, with 127 being the hardest.

Now that we know which fields to look at, we can clean up the text files and join them together with whatever software you want to use. (I like to set up Alteryx to read in the text files as a .csv with a Tab as the delimiter, as each MIDI file will follow a similar format). We can now bring this data into Tableau Desktop to explore. We have our key fields ready to go, so message me if you want any further ideas on how to work with them.

The data is free to explore and can lead to some interesting analysis that explores music in a visual medium. As a music fan, I think this is super helpful when trying to understand a song, and it can help break down a song's structure into different parts. If you want to see the viz that inspired this passion project, then check out the one below:

https://public.tableau.com/app/profile/dinushki.de.livera/viz/TheFirebirdSuiteInfernalDance/Firebird