Recently, I have been using SQL, this is a coding language that works with databases such as Snowflake. This is a widespread business tool that allows a user to:

- Execute queries to retrieve data

- Insert, update and delete data

- Create new databases, tables, views and stored procedures

- Set permissions

When learning a new skill, it is always important to practice, practice and practice! Thus, I have been using the Preppin' Data Challenges to hone my skills. These are weekly exercises to help you learn and develop data preparation skills. The challenges are designed for learning Tableau Prep but they can be completed in R, Python, SQL, DBT, EasyMorph and many other tools.

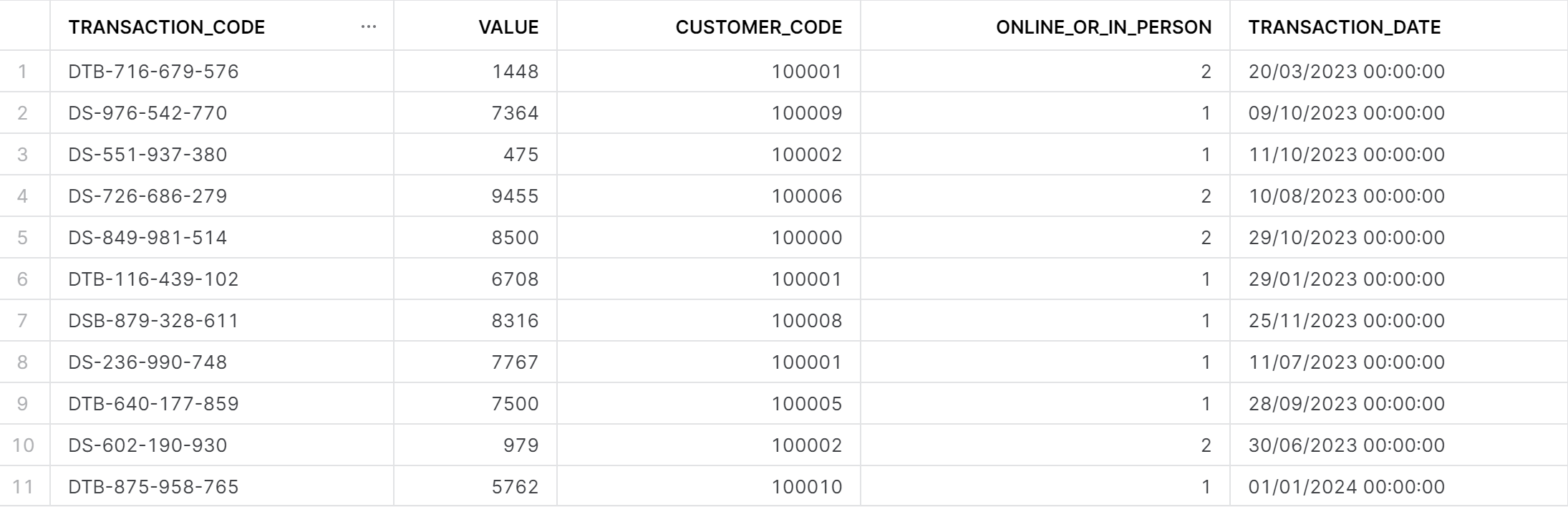

To start, I will be completing challenge 2023- Week 1. This challenge involves transaction data from a fake bank that requires cleaning. The link to the challenge is: https://preppindata.blogspot.com/2023/01/2023-week-1-data-source-bank.html

We have multiple requirements when preparing this dataset.

- First, we need to split the Transaction Code to extract the letters at the start of the transaction code. These identify the bank who processes the transaction.

- We also need to rename the new field with the Bank code 'Bank'.

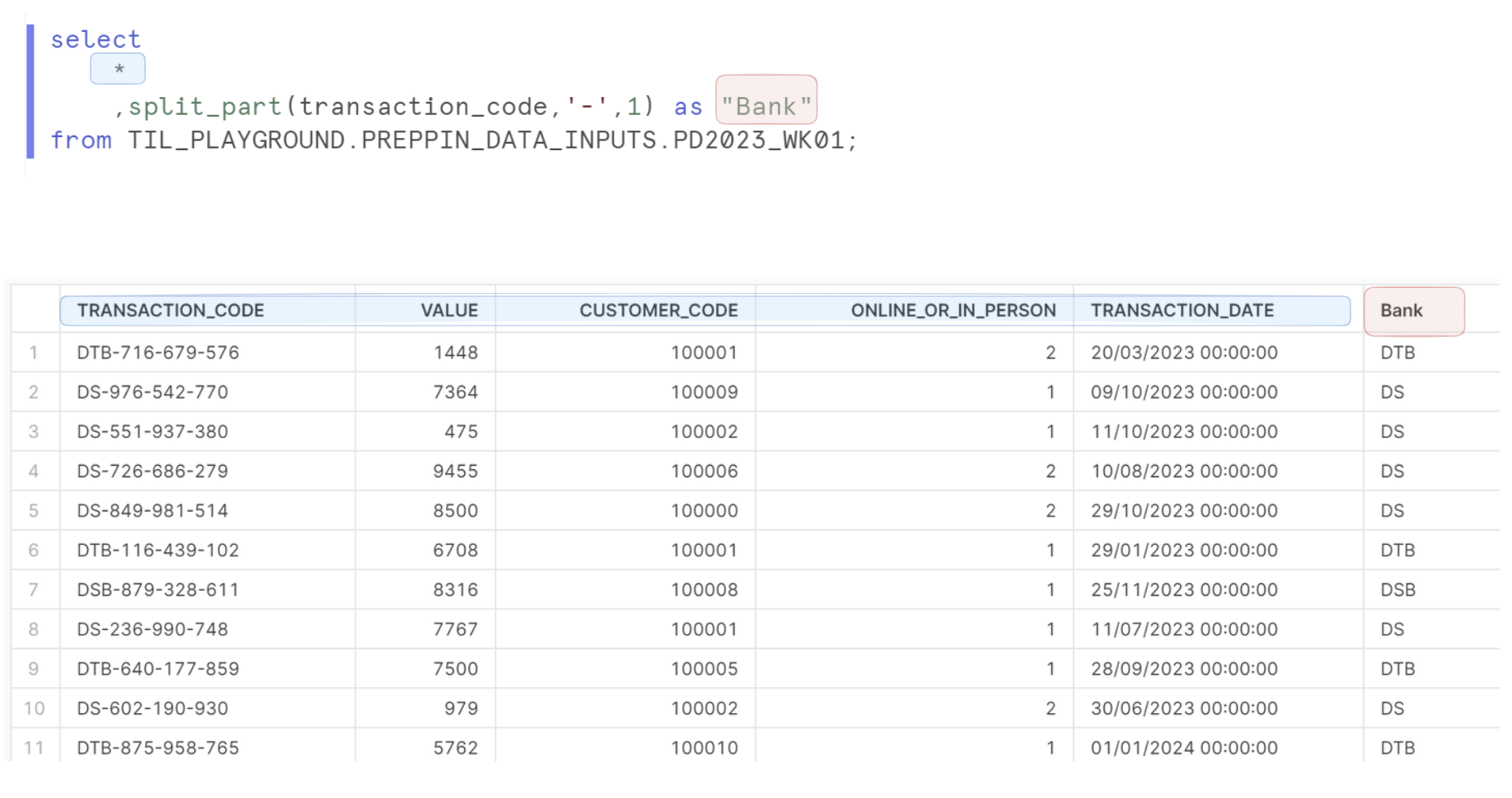

The select syntax tells the database what columns we want. Selecting a * will bring every column from the dataset. We also want a new column of the bank names, this requires a split at the '-'. We only want the first split so there is a 1 afterwards.

When aliasing/renaming columns you add an as followed by the new name. If you want the name to be case sensitive, this needs to be put in " ". However capital letters and underscores also work:

- select OLD_COLUMN as "New Column" -> New Column

- select OLD_COLUMN as new_column -> NEW_COLUMN

To complete the challenge, different levels of detail are required in the outputs. We can separate the outputs using semi-colons. We will need to sum up the values of the transactions in three ways:

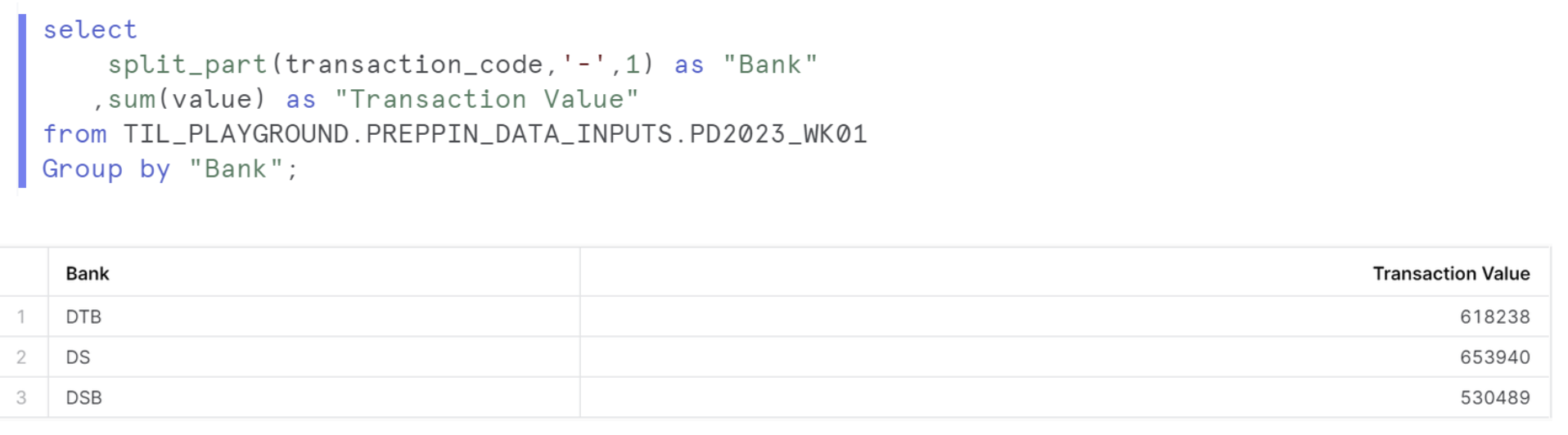

1. Total Values of Transactions by each bank

This is similar to our first step getting the Bank field. To find the total transaction value we need to add up or sum the value column together. I also renamed this to "Transaction Value". To find the totals for each bank we need to do a group by. Grouping by "Bank" will change the dataset to only have a row for each bank.

The query has to be structed in a certain way so the Group by occurs after the from because the data is pulled in (from), then aggregated (group by).

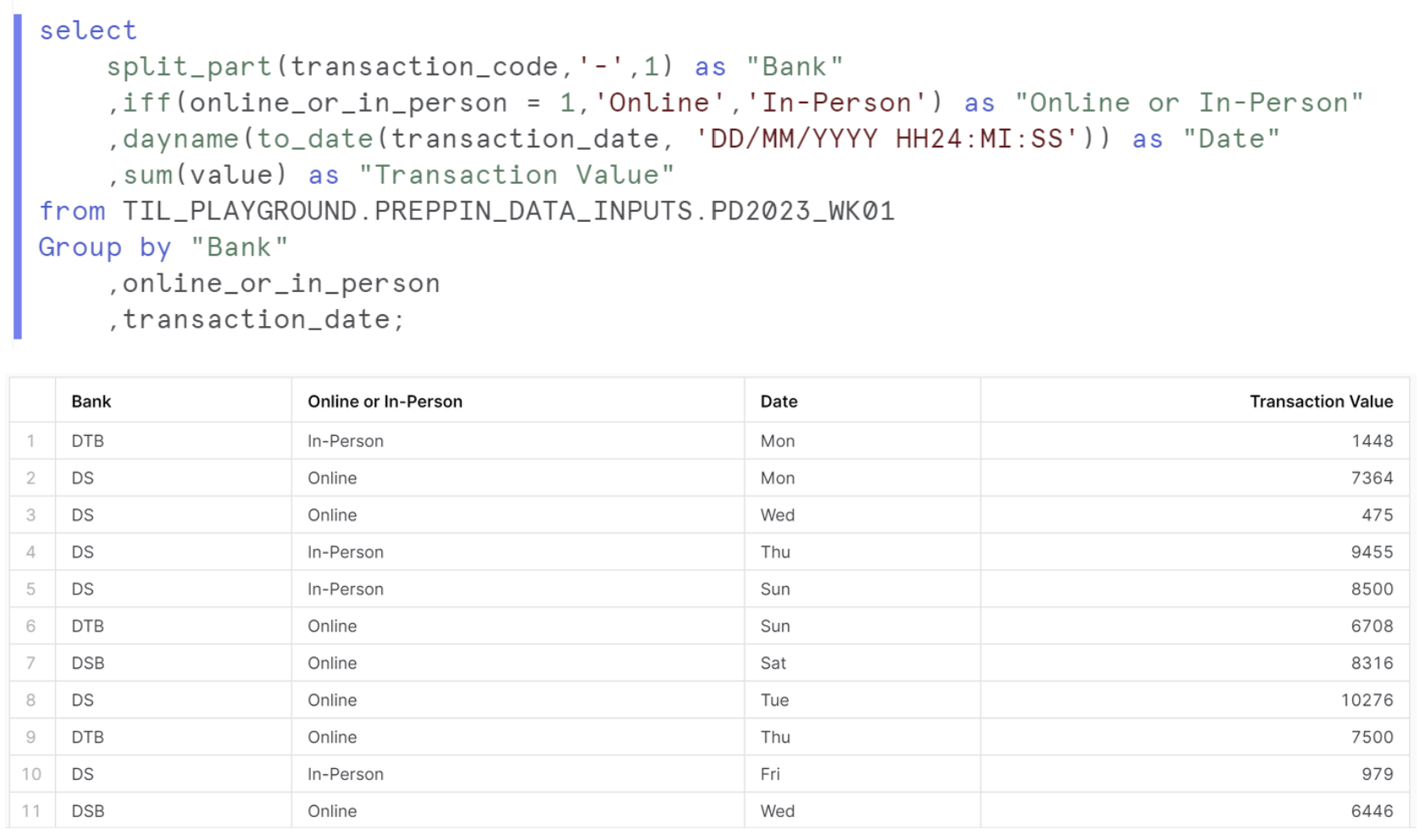

2. Total Values by Bank, Day of the Week and Type of Transaction (Online or In-Person)

The bank column is the same as before. We need to rename the values in the Online or In-person field, Online of the 1 values and In-Person for the 2 values. I did this with an iff statement that will change all 1s to 'Online' and everything else to 'In-Person', I then renamed the column.

To change TRANSACTION_DATE to the days of the week we need to do two steps.

1. First, Snowflake read this column as a string so we need to change this to a datetime data type. This uses the to_date function, but we also need to format this new date so it is read correctly, thus we add 'DD/MM/YYYY HH24:MI:SS' after.

2. We now change this date to the day of the week, so we wrap the to_date function in a dayname function. The whole expression is renamed to "Date".

Finally, we add up all the transactions using sum(value).

To find the Total Values by Bank, Day of the Week and Type of Transaction, we group by Bank, Online or In-Person and date.

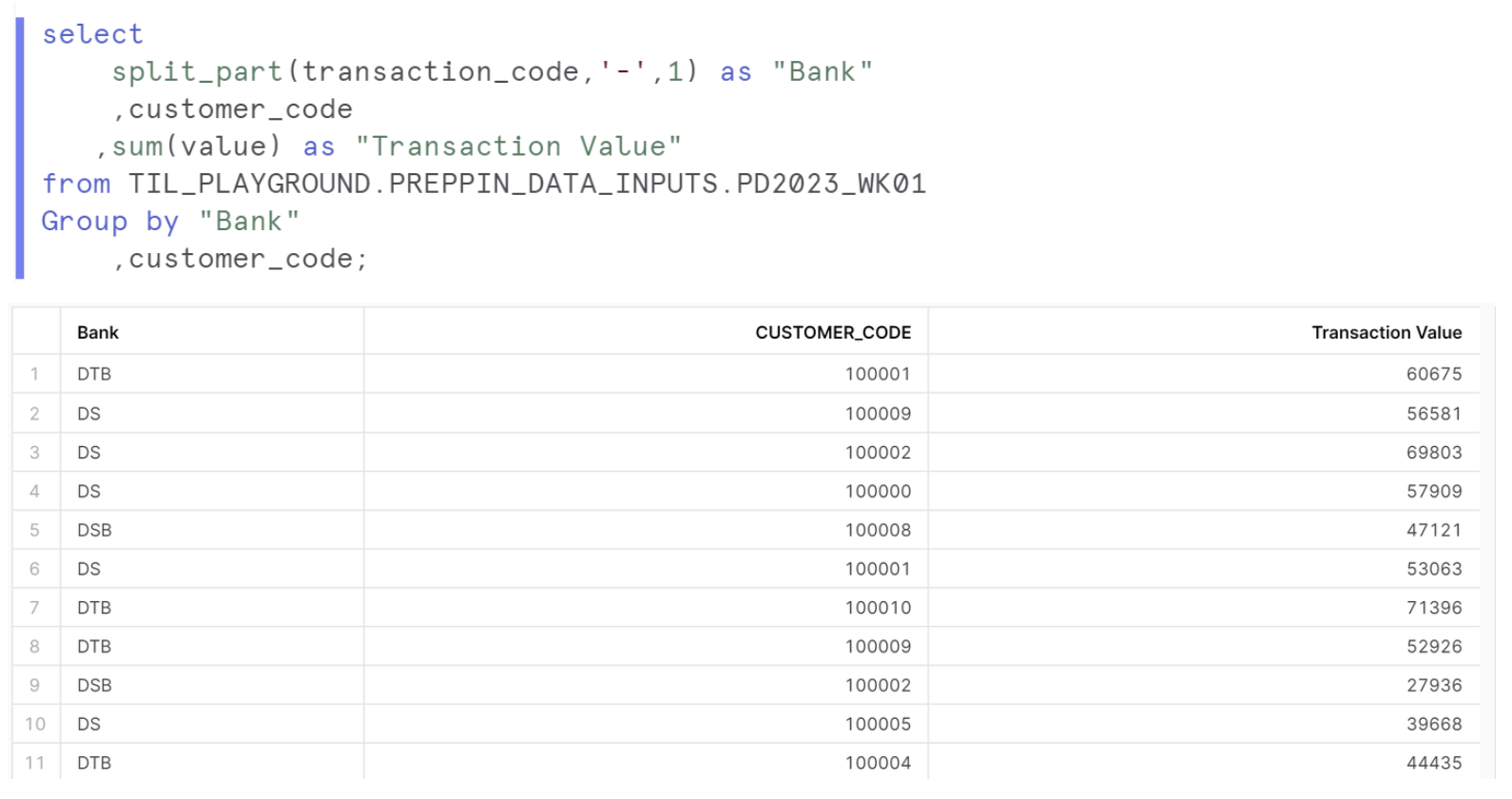

3. Total Values by Bank and Customer Code

Finally, this output repeats our previous steps. We bring (select) in our bank, customer code and transaction value columns. We then group by bank and customer code.

And that's everything! This Preppin' data challenge is useful for practicing date functions and group by aggregations!