An awareness of database structures is important contextual knowledge for data engineering. One of the key principles when thinking about database design is normalization, an approach to organizing data. This blog will introduce normalization and denormalization, discussing their strengths and weaknesses along with an approach to balancing them.

Normalization

Reduce Data Redundancy

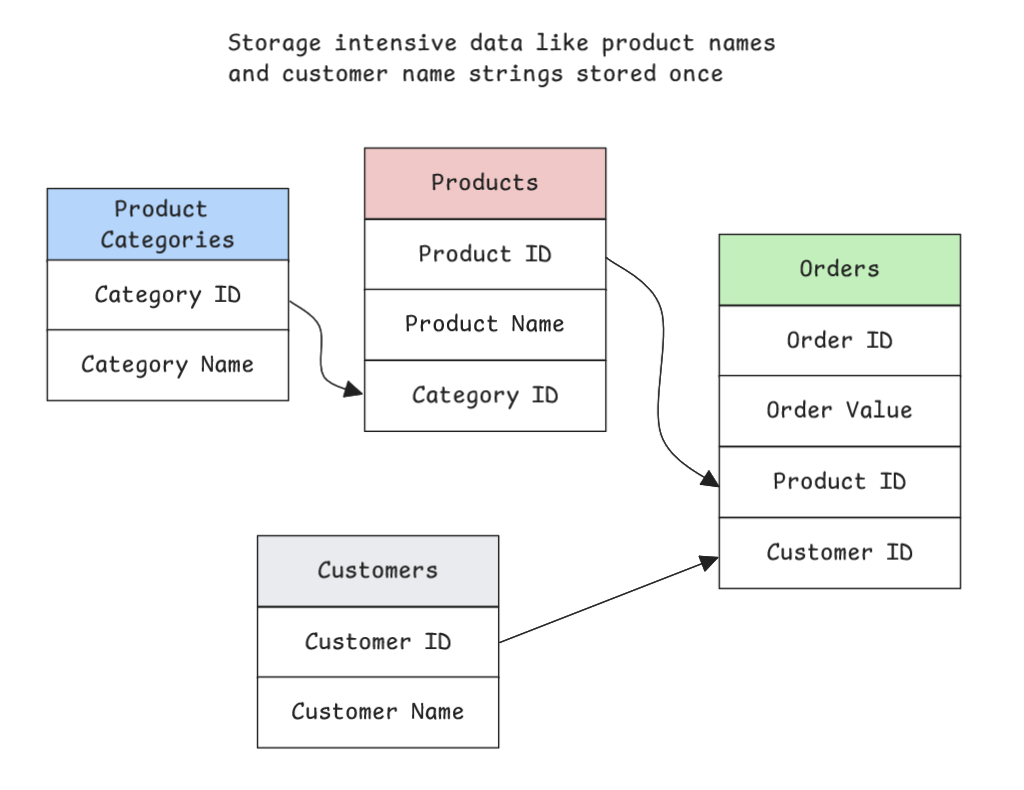

The primary motivation for normalizing data is to reduce data redundancy - i.e. in a smaller more focused tables one piece of data is stored in only one place to avoid the same data being stored in multiple tables.

Simplify Data Updates & Protect Data Integrity

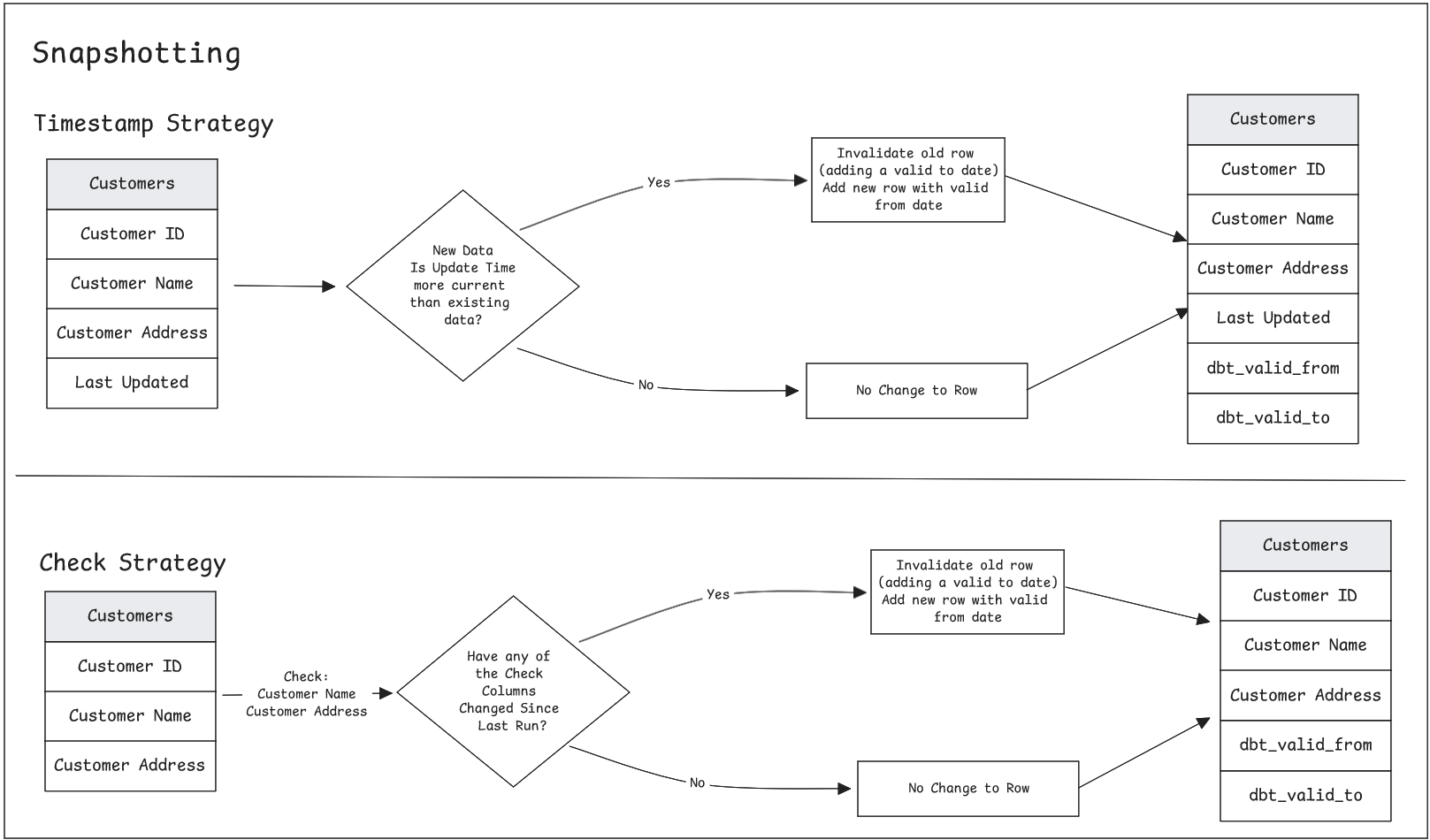

Other benefits of this arrangement include protecting data integrity - pipelines often include update/insert and deletion processes to keep data up to date. For example, monitoring slowly changing dimensions (SCDs) like a customer address. Without a normalized database structure these processes are often more complicated and the risk of inconsistencies is potentially increased. Storing dimensions in separate tables allows the transactional tables to be updated simply with more rows for the new data and any changes that occur to the dimension tables over time can be tracked and monitored with a feature like Snapshotting in dbt.

Structured Database with a Schema to Navigate

The final benefit worth mentioning is that with the data organized logically and relationships between tables mapped, navigating across the the database to identify and query the data needed can be easier. This relies on the database documentation and design being to a high level but by storing data in separate areas with clear documentation

OLTP and Normalization

As such normalized structure is often used in an Online Transactional Processing system (OLTP). This system is transaction orientated (think till data for a store, or logging website traffic). In real-time, large numbers of transactions need to be recorded and update/insert existing tables. Write speed is the priority here and storing quality data.

Denormalization

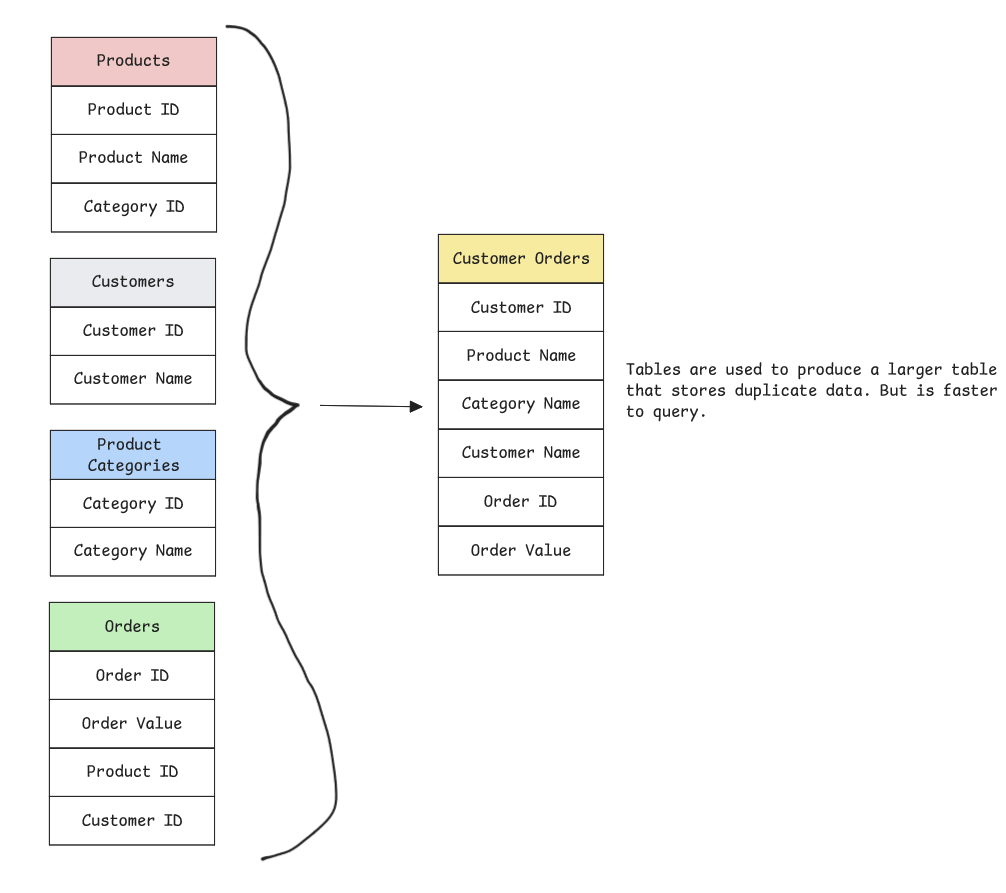

Having explored normalized structure it makes sense to contrast it with the alternative denormalized structure.

Reduce Joins Increase Query Speed

This structure's primary benefit is query speed for analysis and exploration. By trading off the benefits in write speed for update/insert we get a table with the information required for analysis readily usable.

Simpler Queries for Analysts

Furthermore, reducing the need for multiple join clauses simplifies queries making the tables more accessible to analysts with limited SQL knowledge.

Denormalization and OLAP

Given these benefits denormalization makes sense in an Online Analytical Processing Database system (OLAP). This type of system prioritizes search and analysis which is faster on the denormalized tables which might also include pre-aggregated data. Moreover OLAP systems typically have less frequent data updates than an OLTP system meaning that its drawbacks in the update process area are less problematic.

The old adage 'normalize until it hurts, denormalize until it works'

This adage recommends a balanced approach to database design - starting by fully normalizing a database to give a clean and consistent foundation with minimal redundancy. From this foundation by observing and monitoring database usage bottlenecks and repeatedly queried information can be identified and aspects can be denormalized to optimize the querying of the database.