This blog outlines the process and key steps taken to set up a scheduled data extraction using Python and GitHub actions. I've done this for a personal project where I fetch data using the Reddit API, perform a sentiment analysis on the top posts and send the data to Snowflake. I've then used GitHub actions to schedule this send to occur every week. Once I have a few months of data I intend to visualise this sentiment analysis alongside a timeline of world events to see how these affect the sentiment of the Reddit public.

Fetching Data from the Reddit API

Having experience calling APIs in Alteryx I decided to use Python this time. The first decision to make was which IDE I was going to use. I went with PyCharm because I find it useful that it creates a virtual environment for each project with an integrated terminal. I also like the UI that allows you to easily install packages and access the advanced debugger.

When writing the code I used ChatGPT to help with some of the API specifics. I find ChatGPT can offer a concise version of the documentation when asked questions in the right way. I also used the PyCharm debugger to help with any errors. Here are a few key learnings from writing this code:

1) Install any required packages (praw, vadersentiment etc).

2) Make sure to hide credentials using environment variables.

3) Include documentation within the code so you can look back and see what each section is trying to do.

4) If using ChatGPT make sure you understand each line that it gives you. Copy and pasting code without understanding it can make it impossible to debug if you later have any issues.

Sending the data to Snowflake

Once I was able to fetch the data using the Reddit API, the next step was sending it to Snowflake. I chose an easier route of creating the table first in Snowflake before inputting the data as I knew what fields and data types I needed (I believe there's also a dynamic way of doing this using staging where a table is created based on the data that you're pushing to Snowflake).

Writing the code for this was similar to writing the code for the Reddit API. I used ChatGPT to digest the documentation, hid passwords etc with environmental variables and made sure to install any packages. I also needed to convert a date field into the correct format for Snowflake.

Scheduling the script using GitHub Actions

Now I have a code that fetches the data I require and sends it to Snowflake the next step is scheduling this to occur regularly. I decided to do this using GitHub actions as it offers a relatively easy way to do this with plenty of documentation, enables version control and allows me to share my code with others and build my portfolio.

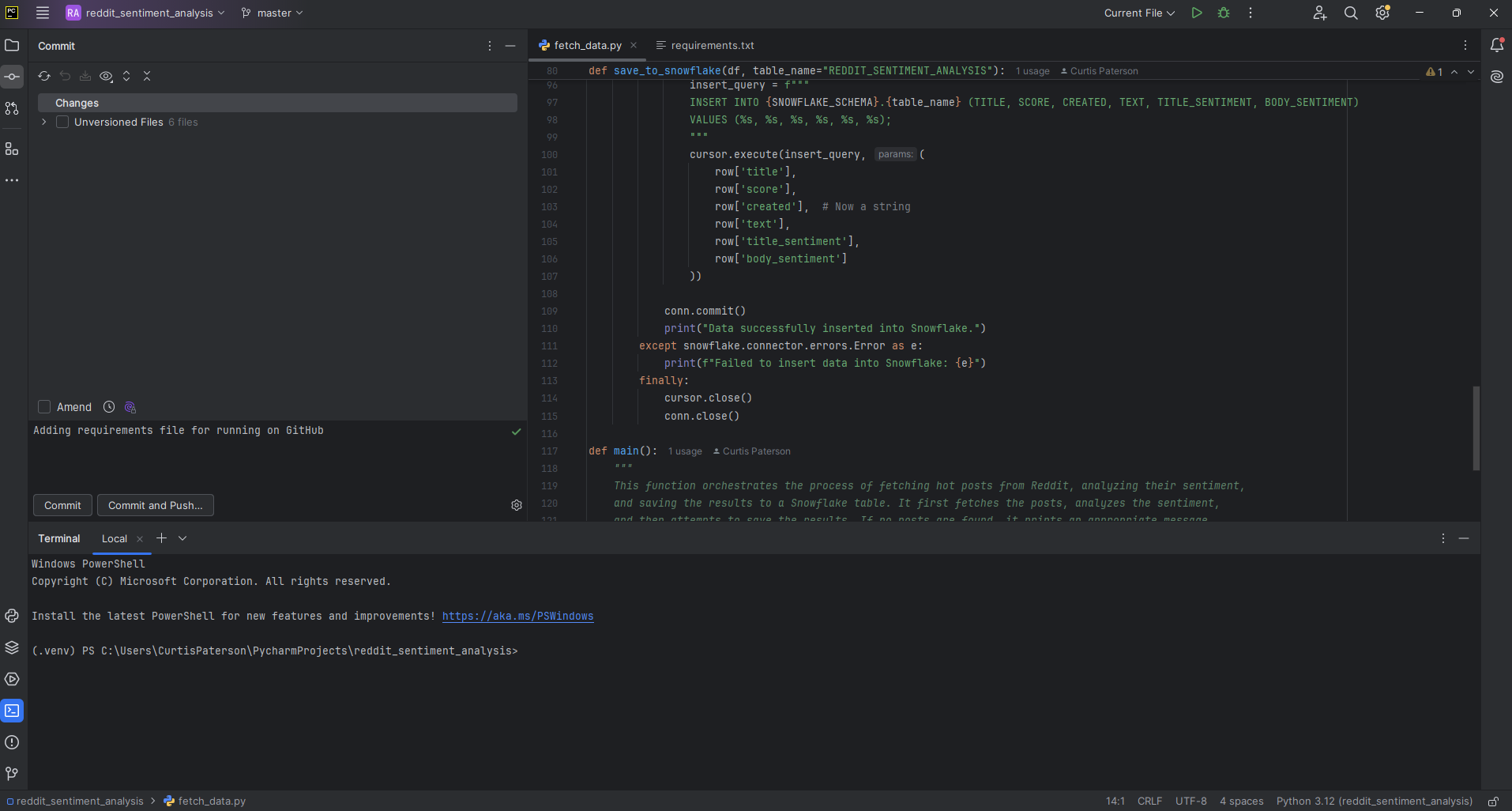

PyCharm has Git and GitHub integration that allows you to push your initial code and any additional changes to your GitHub repository. It also allows version control where you can add comments explaining any changes that have been made, and review these changes yourself or by others before pushing them to your main branch. However, there are a few things you need to look out for before you can get this working.

1) All environmental variables used in PyCharm will also need to be added to the GitHub repository settings.

2) A requirements file detailing all the packages and their versions needs to be included in the GitHub repository. PyCharm has an automated function that creates this file for you based on your code.

3) To schedule the script, you need to create a workflow. Firstly, create a folder in the repository named .github/workflows then within this create a yaml file that includes the schedule details as well as dependencies and environmental variables. This workflow should then appear within your actions tab where it can be manually run to test it's working (can only be manually run if the yaml includes the workflow_dispatch event trigger).

Conclusion

Although this took a while to set up and had a few issues, mainly around ensuring the environment variables were included everywhere and that the code was correct, it ultimately provided a great solution. I now have a reliable and scheduled code that runs automatically and has version control enabled for any future changes. I can go back into the original code and make any amendments if required and will receive an email notification if there are any errors.