Today I’ve been learning all about webscraping. For those who don’t know, webscraping is a way of extracting information from a website programmatically, and it’s worth mentioning that this is not permitted for every website. I started by looking at a very simple way of doing this by using the =IMPORTHTML() function in Google Sheets (this won’t work in Excel) and went on to look at some not-so-simple ways of webscraping in Alteryx.

The first part of webscraping is finding the right website to use. I decided to go with theathletic.com, specifically looking at Middlesbrough FC’s stats for the season. I chose this website as it contained one simple table, including player names and the corresponding player performance stats. The website was simple, without any tabs and using the element inspector (ctrl + shift + i) I could see that the table had a clear start and end tag and all the table data was present. The next step was to copy the website URL and add a Text Input to a new Alteryx workflow. I copied the URL into the first row of the Text Input and named the field URL. I then added a download tool after the Text Input and made sure to set the select field as the newly created URL field. I added a Select Tool after the Download Tool and right-clicked the Select Tool and selected cache and run. This is a handy way of saving processing power and time as the workflow will remain ran up until and including this Select Tool.

I was then left with a simple table with a single cell (DownloadData) containing the entire code for the webpage. It was pretty daunting at first to imagine how I could extract anything useful from all this text so I started to try and filter the text to only show me the text which corresponded to the table I wanted to look at. I added a Data Cleansing tool to remove any leading and trailing whitespace, split the cell into rows on every space and used a Multi-Row Formula to create a new column labelling each row associated with the target table as 1. I did this using the following formula where ‘outfield’ was the starting and </table>’ the ending tags for the table as discovered using the element inspector on the web page.:

IF CONTAINS([DownloadData],'outfield')

THEN [Row-1:Table ID]+1

ELSEIF CONTAINS([DownloadData],'</table>') THEN 0

ELSE [Row-1:Table ID]

ENDIF

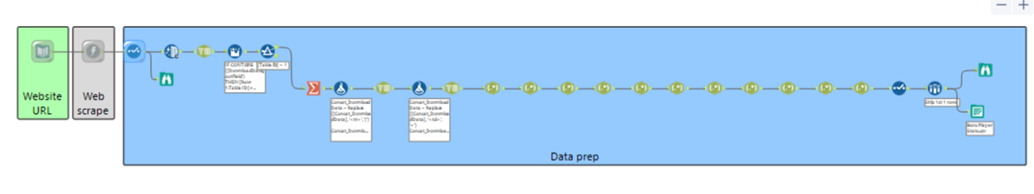

I then filtered to only include table IDs of 1. The data seemed much more manageable now and I’d realised that there were more tags within the table, with <tr> at the start of each new row and <td> at the start of each column. I used a Formula Tool to replace each <tr> with a | and each <td> with a ~ to enable me to use these tags as delimiters. I then used a Text to Columns Tool set to text to columns using ~ as a delimiter and text to rows using | as a delimiter. I now had all the information I needed in the correct tabular format however, I also had a load of other text that I didn’t want in each cell. I decided to use a series of simple parse REGEX in order to extract the information I needed although, there may have been a quicker way to do that. Here’s the whole workflow that I ended up with: