Every time you access a website, you are sending a request to a server, and that server then sends you back a response. That response is the HTML/CSS/JavaScript we talked about in Part I. Another way to send requests to a website, rather than simply visiting the page, is through an API, or Application Programming Interface.

APIs are essentially a defined method of communicating between software components; in this instance it’s between you (in Alteryx) and the website. When you make a request to an API, you still get a response, but this time it arrives as structured data in a file format rather than being rendered as an all-singing, all-dancing webpage. The most common formats are JSON and XML, and Alteryx has helpful tools for parsing the data out of both.
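Outside Alteryx, the same parsing step can be sketched in a few lines of Python using only the standard library. The two response bodies below are made up for illustration (they are not from any real API), and `rows_from_json` and `rows_from_xml` are hypothetical helper names.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical API responses: the same two records, once as JSON and once as XML.
JSON_BODY = '{"results": [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]}'
XML_BODY = (
    "<results>"
    "<item><id>1</id><name>Ada</name></item>"
    "<item><id>2</id><name>Grace</name></item>"
    "</results>"
)


def rows_from_json(body):
    """Return one dict per record found under the assumed 'results' key."""
    return json.loads(body)["results"]


def rows_from_xml(body):
    """Return one dict per <item>, mapping each child tag name to its text."""
    root = ET.fromstring(body)
    return [{child.tag: child.text for child in item} for item in root.findall("item")]


json_rows = rows_from_json(JSON_BODY)
xml_rows = rows_from_xml(XML_BODY)
```

Note that JSON keeps its types (the `id` comes back as a number), whereas every XML value comes back as text, so you would usually convert types after parsing.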

APIs can be pretty complicated, and there’s lots you could potentially dig into around types, methods, authentication and so on. The absolute best place to start when you are thinking about accessing an API is the documentation. This is where you should find everything about what data you can get from the API, how much, and how often. Some websites limit the rate or the amount you can access, but that should all be in the documentation.

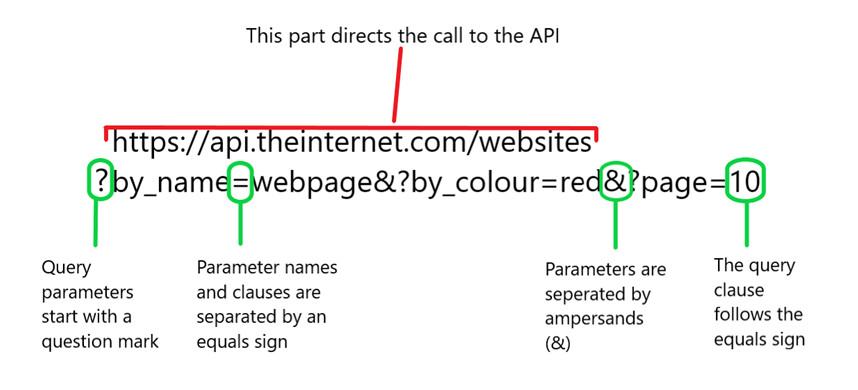

You will also be able to find information about the query string parameters, which you can manipulate to extract your data. You’ve probably seen them in URLs before: they come after the `?` and tell the website where to go to get the information, or what information you want. So, for example:

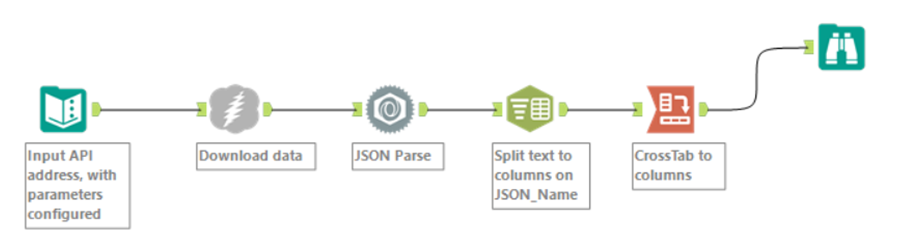
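To make the structure of a query string concrete, here is a minimal Python sketch that builds one from a set of parameters and then splits it back apart. The base URL and the parameter names (`q`, `page`, `per_page`) are assumptions for illustration; the real names come from the documentation of whichever API you use.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Hypothetical endpoint, used only to illustrate the URL structure.
BASE_URL = "https://api.example.com/v1/search"


def build_url(base, params):
    """Append the parameters to the base URL as an encoded query string."""
    return f"{base}?{urlencode(params)}"


# Build a request URL from assumed parameter names.
url = build_url(BASE_URL, {"q": "data science", "page": 1, "per_page": 50})

# Split the query string back into its individual parameters.
parsed = parse_qs(urlsplit(url).query)
```

Each parameter is a `name=value` pair, pairs are joined with `&`, and special characters such as spaces are encoded so the URL stays valid.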

A simple workflow for requesting a single page from an API follows. What you get out will be a series of columns of data, the size and scope of which depend on the API and the query string parameters you have set.

It’s also possible to paginate through multiple pages of an API: all you need to do is update the page parameter wherever it appears in the URL. In Alteryx, this can be done by generating rows with a new count field, ensuring both fields are strings with a Select tool, then using RegEx to write the row count into the page parameter.
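The same idea, sketched in Python rather than as an Alteryx workflow: generate a count, turn it into a string, and use a regular expression to swap it into the page parameter. The URL and the parameter name `page` are assumptions, and `paginate` is a hypothetical helper; check the API's documentation for the real parameter name.

```python
import re


def paginate(url, pages):
    """Return one URL per page number by rewriting the existing page parameter."""
    urls = []
    for n in range(1, pages + 1):
        page = str(n)  # the count must be a string before it goes into the URL
        # Match only the digits directly after "?page=" or "&page=",
        # so similarly named parameters such as "per_page" are left alone.
        urls.append(re.sub(r"(?<=[?&]page=)\d+", page, url))
    return urls


# Hypothetical starting URL that already contains a page parameter.
start = "https://api.example.com/v1/search?q=alteryx&page=1&per_page=50"
page_urls = paginate(start, 3)
```

Each URL in `page_urls` can then be requested in turn, keeping within whatever rate limits the documentation sets.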

There are loads of APIs you can play around with, and the best way to start is to find something that interests you and just have a go. You can switch around your parameters, download the data, and pretty soon you’ll be parsing with the best of them.

Stay tuned for the final instalment on what to do when there’s no API (spoiler alert: it’s web scraping).